Tim Clifford

Nov 17, 2021

When Lagoon was first conceived back in 2016, its fundamental aim was “to make developers’ lives easier.” Since then, Lagoon has grown into a Swiss Army knife for delivering applications onto Kubernetes clusters, removing the need to understand Kubernetes itself in depth. Lagoon sits between your Git repository and a Kubernetes cluster to build, deploy, and manage your applications entirely from predefined YAML configuration (‘.lagoon.yml’). Although Lagoon takes much of the infrastructure hassle away, as an open-source tool it still gives developers the freedom to build solutions that integrate with the technology stacks they work with.

One of our customers, Synetic, showcases this kind of integration wonderfully in their development CI/CD system. Thanks to Lagoon’s versatility, they were able to build a system that fits both their business case and their own development workflow.

Lagoon’s versatility empowers development teams to build the workflows and tools they need.

Synetic is a digital agency based in the Netherlands that has been building fantastic digital solutions since 2004. Recently, they gave us a demo of their impressive automated continuous deployment system built in GitLab.

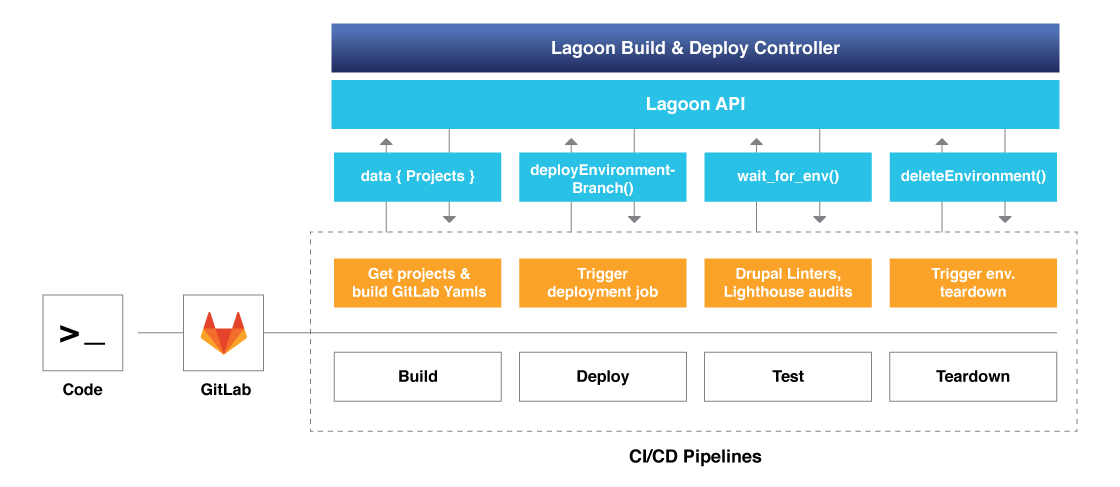

Because they use Lagoon to build and deploy their websites, they wanted GitLab pipelines to drive this process automatically, with every job in their CI/CD workflow running consistently and reliably.

GitLab uses runners, which execute jobs through executors (in this case, Docker containers), to carry out Synetic’s configured pipeline of build, test, teardown, and deployment jobs. The pipeline manages Lagoon environments based on the results of those jobs. For example, when a merge request (MR) has been successfully tested and merged upstream, a subsequent teardown process removes its Lagoon environment after a set period of time. Automating the teardown of redundant environments not only tidies up messy projects but is also more economical, because it shrinks your platform footprint and therefore your costs.
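The teardown rule described above (remove an MR’s environment once it has been merged and a set period has passed) can be sketched as a small selection step. This is a minimal sketch assuming a two-day grace period and a hypothetical `MergeRequestEnv` record; the article doesn’t state Synetic’s actual period, and in practice the merge timestamp would come from GitLab.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Sequence

# Hypothetical grace period: the article only says "a set period of time".
TEARDOWN_GRACE_PERIOD = timedelta(days=2)


@dataclass
class MergeRequestEnv:
    """Hypothetical record tying a Lagoon environment to its merge request."""
    environment_name: str
    merged_at: Optional[datetime]  # None while the MR is still open


def due_for_teardown(env: MergeRequestEnv, now: datetime,
                     grace: timedelta = TEARDOWN_GRACE_PERIOD) -> bool:
    """True once the MR is merged and the grace period has fully elapsed."""
    if env.merged_at is None:
        return False  # open MRs keep their environment
    return now - env.merged_at >= grace


def environments_to_destroy(envs: Sequence[MergeRequestEnv],
                            now: Optional[datetime] = None,
                            grace: timedelta = TEARDOWN_GRACE_PERIOD) -> List[str]:
    """Names of the environments a teardown job would remove on this run."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [e.environment_name for e in envs if due_for_teardown(e, now, grace)]


if __name__ == "__main__":
    now = datetime(2021, 11, 17, 12, 0, tzinfo=timezone.utc)
    envs = [
        MergeRequestEnv("mr-12", merged_at=now - timedelta(days=3)),
        MergeRequestEnv("mr-15", merged_at=now - timedelta(hours=6)),
        MergeRequestEnv("mr-18", merged_at=None),
    ]
    print(environments_to_destroy(envs, now))  # ['mr-12']
```

Each selected name would then be handed to whatever sends the destroy request to Lagoon.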

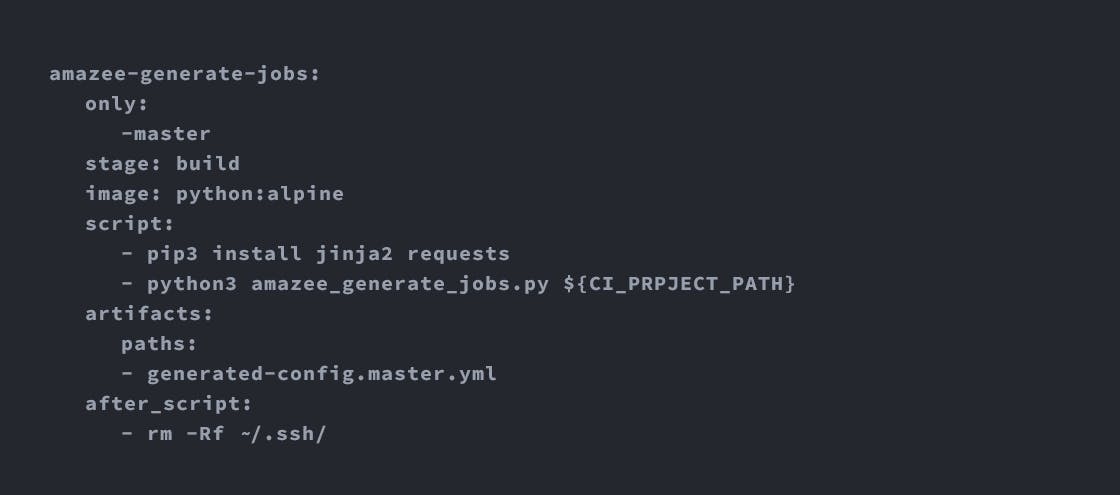

During the build job, GitLab job template files are generated and populated with data retrieved from the Lagoon GraphQL API. By extracting only the project and environment data they needed from Lagoon up front, Synetic could reuse that information in later stages of the pipeline.
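A minimal sketch of that build-time lookup follows, assuming a bearer token (for example, stored as a CI/CD variable) and a placeholder endpoint URL. The `projectByName` query and its fields are our assumption about the Lagoon schema, not Synetic’s code. The sketch builds the request, then trims a response down to the data later stages need.

```python
import json
import urllib.request

# Assumed query: `projectByName` and the environment fields below reflect our
# reading of the Lagoon GraphQL schema; verify them against your Lagoon version.
PROJECT_QUERY = """
query ($name: String!) {
  projectByName(name: $name) {
    name
    environments {
      name
      environmentType
      route
    }
  }
}
"""


def build_project_request(endpoint: str, token: str, project: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Lagoon for one project's data."""
    body = json.dumps({"query": PROJECT_QUERY, "variables": {"name": project}})
    return urllib.request.Request(
        endpoint,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


def extract_routes(response: dict) -> dict:
    """Keep only what later stages need here: environment name -> route."""
    project = response["data"]["projectByName"]
    return {env["name"]: env.get("route") for env in project["environments"]}


if __name__ == "__main__":
    # A canned response in the shape the query above would return (illustrative data).
    sample = {"data": {"projectByName": {"name": "example", "environments": [
        {"name": "master", "environmentType": "production", "route": "https://www.example.com"},
        {"name": "mr-7", "environmentType": "development", "route": None},
    ]}}}
    print(extract_routes(sample))
```

Sending the request is a matter of passing it to `urllib.request.urlopen` and decoding the JSON body. The trimmed dictionary is what would be written into the generated job templates.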

Deployment

A deployment job sends a request to deploy a new environment based on a merge request. (Pretty neat.)

Teardown

A teardown job sends a request to destroy a Lagoon environment.

Lighthouse

A Lighthouse stage runs after a successful deployment.
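The deployment and teardown stages each come down to a single GraphQL mutation. The sketch below only builds the request bodies, which could be POSTed to the Lagoon API like any other call. The mutation names (`deployEnvironmentBranch`, `deleteEnvironment`) and their input fields are our assumptions about the Lagoon schema, not Synetic’s code. The Lighthouse stage is left out.

```python
import json

# Assumed mutation names and input shapes (deployEnvironmentBranch, deleteEnvironment);
# check them against the schema of the Lagoon API you are targeting.
DEPLOY_BRANCH = """
mutation ($project: String!, $branch: String!) {
  deployEnvironmentBranch(input: {project: {name: $project}, branchName: $branch})
}
"""

DELETE_ENVIRONMENT = """
mutation ($project: String!, $environment: String!) {
  deleteEnvironment(input: {project: $project, name: $environment, execute: true})
}
"""


def graphql_body(query: str, **variables: str) -> bytes:
    """Encode a GraphQL request body as JSON bytes."""
    return json.dumps({"query": query, "variables": variables}).encode("utf-8")


def deploy_body(project: str, branch: str) -> bytes:
    """Deployment stage: ask Lagoon to build and deploy a branch."""
    return graphql_body(DEPLOY_BRANCH, project=project, branch=branch)


def teardown_body(project: str, environment: str) -> bytes:
    """Teardown stage: ask Lagoon to remove an environment."""
    return graphql_body(DELETE_ENVIRONMENT, project=project, environment=environment)


if __name__ == "__main__":
    print(deploy_body("example", "feature-x").decode("utf-8"))
```

Keeping the queries as constants and passing values as GraphQL variables avoids string-splicing branch names into the query text.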

Inside these individual stages, Synetic uses a lightweight CLI deployment tool with some nifty Python commands that integrate with our Lagoon API:

Retrieve logs (get_logs_by_remote_id(remote_id))

Wait for environments to be ready (wait_for_env_ready())

Update environments (promote_env_toprod())

Delete environments (destroy_env())

These commands let Synetic run GitLab CI jobs that interact with Lagoon seamlessly.
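A command like wait_for_env_ready() typically polls until a deployment finishes. Below is a minimal sketch of such a polling loop, not Synetic’s implementation. `poll_until_ready` and its `get_status` callback are hypothetical, and the terminal status values are assumptions to confirm against what your Lagoon API reports. `sleep` and `clock` are injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable

# Assumed Lagoon deployment statuses; confirm the values your Lagoon API reports.
SUCCESS = {"complete"}
FAILURE = {"failed", "cancelled", "error"}


class DeploymentFailed(RuntimeError):
    """Raised when the deployment reaches a failing terminal status."""


def poll_until_ready(get_status: Callable[[], str], timeout: float = 900.0,
                     interval: float = 15.0,
                     sleep: Callable[[float], None] = time.sleep,
                     clock: Callable[[], float] = time.monotonic) -> str:
    """Poll get_status() until success, failure, or timeout.

    get_status is a hypothetical callback, e.g. one that queries the Lagoon API
    for the latest deployment of an environment.
    """
    deadline = clock() + timeout
    while True:
        status = get_status()
        if status in SUCCESS:
            return status
        if status in FAILURE:
            raise DeploymentFailed(f"deployment ended with status {status!r}")
        if clock() >= deadline:
            raise TimeoutError(f"not ready after {timeout}s (last status {status!r})")
        sleep(interval)


if __name__ == "__main__":
    # Simulated status sequence instead of real API calls.
    statuses = iter(["pending", "running", "complete"])
    print(poll_until_ready(lambda: next(statuses), sleep=lambda s: None))  # complete
```

Raising on failure or timeout makes the GitLab job itself fail, so later stages such as Lighthouse don’t run against a broken environment.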

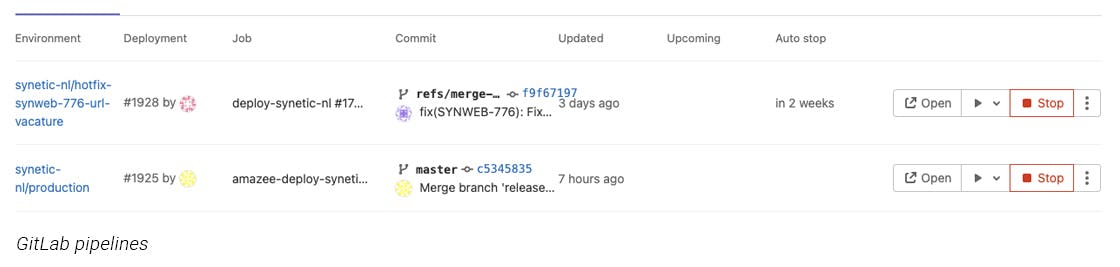

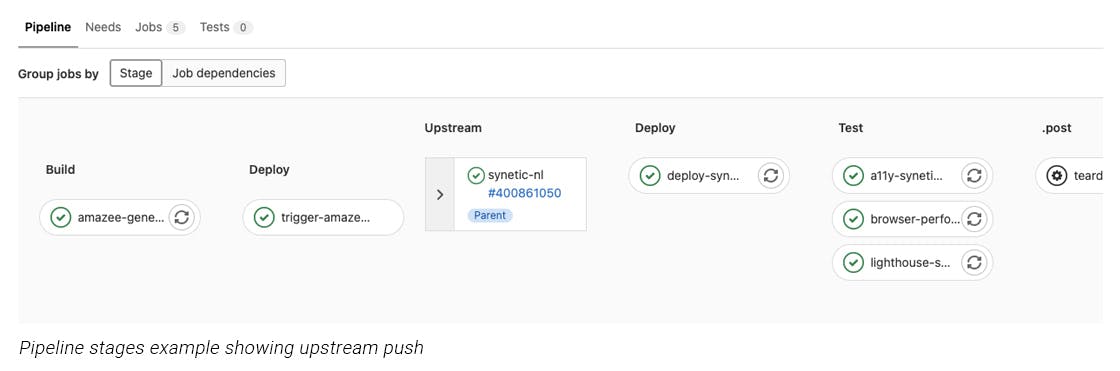

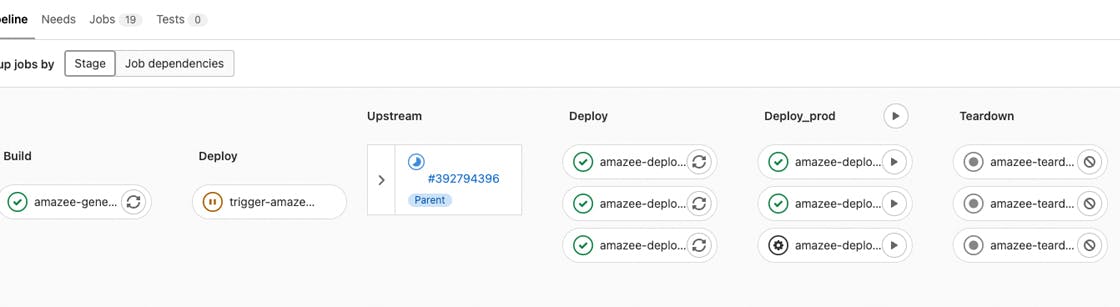

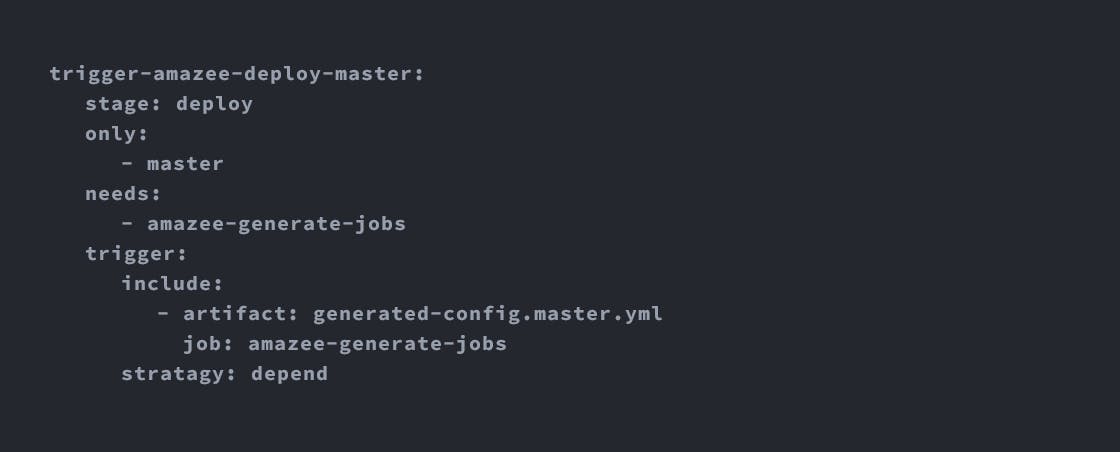

Synetic uses polysites to deploy a single repository to multiple Lagoon projects. A Python script generates dynamic GitLab CI/CD jobs, which are then triggered by a second job that simply executes the generated YAML as a normal GitLab CI pipeline.

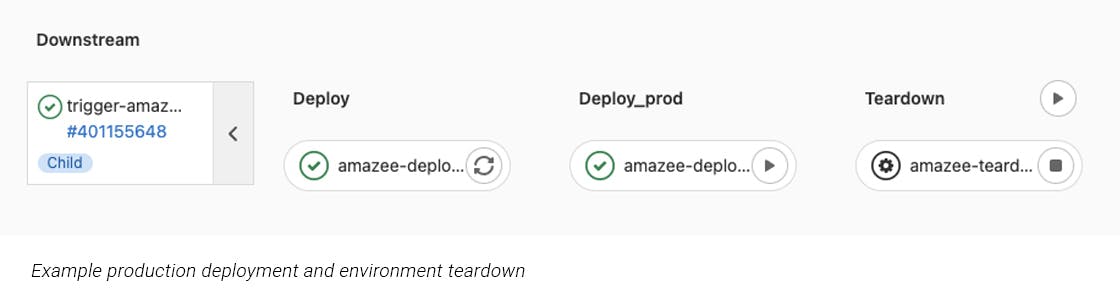

In the GitLab UI, the jobs appear one after another. After a successful deploy job for master, the production deploy can be triggered manually.

The generate job runs for every branch a pipeline can be created for; in this case, it runs for master. The job saves the generated pipeline as an artifact. This pipeline contains 3 stages:

The trigger job executes the pipeline for the branch it was generated for: GitLab pulls the file from the artifacts and runs it as a normal pipeline.
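A generator like this can be quite small. The sketch below emits one set of jobs per Lagoon project and makes the production job manual on master. The stage names, the `deploy.py` script, and its sub-commands are hypothetical placeholders, not Synetic’s actual stages or tool.

```python
from typing import List, Sequence

# Illustrative stage names and script commands; `deploy.py` and its sub-commands
# are hypothetical stand-ins for Synetic's own deployment tool.
STAGES = ["deploy", "lighthouse", "production"]


def job(name: str, stage: str, script: Sequence[str], extra: Sequence[str] = ()) -> List[str]:
    """Render one GitLab CI job as YAML lines."""
    lines = [f"{name}:", f"  stage: {stage}"]
    lines += [f"  {line}" for line in extra]
    lines.append("  script:")
    lines += [f"    - {cmd}" for cmd in script]
    return lines


def generate_pipeline(projects: Sequence[str], branch: str) -> str:
    """Build a child pipeline with one set of jobs per Lagoon project."""
    lines = ["stages:"] + [f"  - {stage}" for stage in STAGES]
    for project in projects:
        lines.append("")
        lines += job(f"deploy-{project}", "deploy",
                     [f"python deploy.py deploy --project {project} --branch {branch}"])
        lines += job(f"lighthouse-{project}", "lighthouse",
                     [f"python deploy.py lighthouse --project {project} --branch {branch}"],
                     extra=[f"needs: [deploy-{project}]"])
        if branch == "master":
            # Production deploys wait for a manual click in the GitLab UI.
            lines += job(f"production-{project}", "production",
                         [f"python deploy.py promote --project {project}"],
                         extra=["when: manual", f"needs: [deploy-{project}]"])
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # The generate job could redirect this output to a file saved as an artifact.
    print(generate_pipeline(["site-a", "site-b"], "master"))
```

In the parent `.gitlab-ci.yml`, the trigger job can start the saved file as a child pipeline using GitLab’s dynamic child pipeline syntax (`trigger` with `include: artifact:` pointing at the generate job’s artifact).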

Synetic used the Lagoon API both to query Lagoon environment information and to fire GraphQL mutations that run deployment and teardown tasks. However, this could also have been implemented with the Lagoon CLI. For example, we could use the ‘lagoon deploy’ and ‘lagoon get’ commands to deploy and promote branches and to extract project data directly from Lagoon. On top of this, we could run Lagoon tasks against an environment with the ‘lagoon run’ command; these tasks could include database syncs, backups, file syncing, or clearing caches. Lagoon also lets you define custom tasks that run inside a shell on one of the containers in your environment.
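For comparison, here is a minimal sketch of driving the Lagoon CLI from a Python CI job instead of calling the API directly. The `lagoon deploy branch` and `lagoon get project` subcommands and their `--project`/`--branch` flags are assumptions to check against the CLI’s `--help` output. `dry_run` defaults to True, so the sketch only returns the command it would run.

```python
import shlex
import subprocess
from typing import List

# Assumed command shapes for lagoon-cli; confirm with `lagoon deploy branch --help`
# and `lagoon get project --help`, including any flag needed to skip prompts in CI.


def deploy_branch_cmd(project: str, branch: str) -> List[str]:
    """argv asking Lagoon to deploy a branch of a project."""
    return ["lagoon", "deploy", "branch", "--project", project, "--branch", branch]


def get_project_cmd(project: str) -> List[str]:
    """argv fetching a project's details from Lagoon."""
    return ["lagoon", "get", "project", "--project", project]


def run_lagoon(cmd: List[str], dry_run: bool = True) -> str:
    """Run a lagoon-cli command, or return the shell-quoted command when dry_run."""
    if dry_run:
        return shlex.join(cmd)
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    print(run_lagoon(deploy_branch_cmd("site-a", "feature/login")))
```

Passing an argument list rather than a shell string means branch names are never interpreted by a shell, and `check=True` turns a failed CLI call into a failed CI job.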

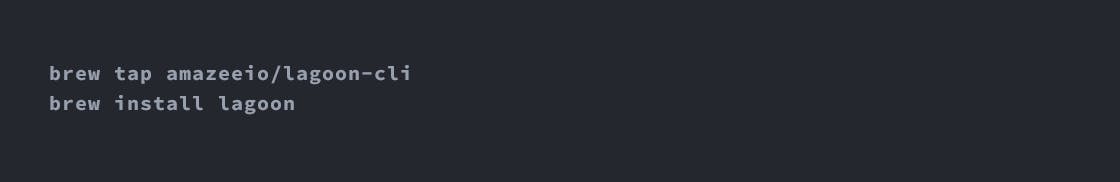

To install lagoon-cli you can use brew:

For a list of available Lagoon CLI commands, see the Lagoon CLI documentation.

A core aim of Lagoon is to loosen technical constraints and empower developers with the functionality they need to build the processes that work best for them. It’s great to see users such as Synetic utilise this to produce a really slick CI process.

Want to do something similar, or have another innovative idea you’re ready to try? We’re here for you! We can offer support and help you integrate your tools and processes with Lagoon. Reach out to us on amazeeio.rocket.chat. Or, get in touch with us and we’ll set up a demo.

To find out more about upcoming Lagoon features, check out our Roadmap.